Network Isolation for Distributed Storage

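To improve storage performance, you can configure Ceph with a dedicated network for communication between OSDs. Compared with running all traffic over a single network, this separation can significantly improve the performance of the storage cluster.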

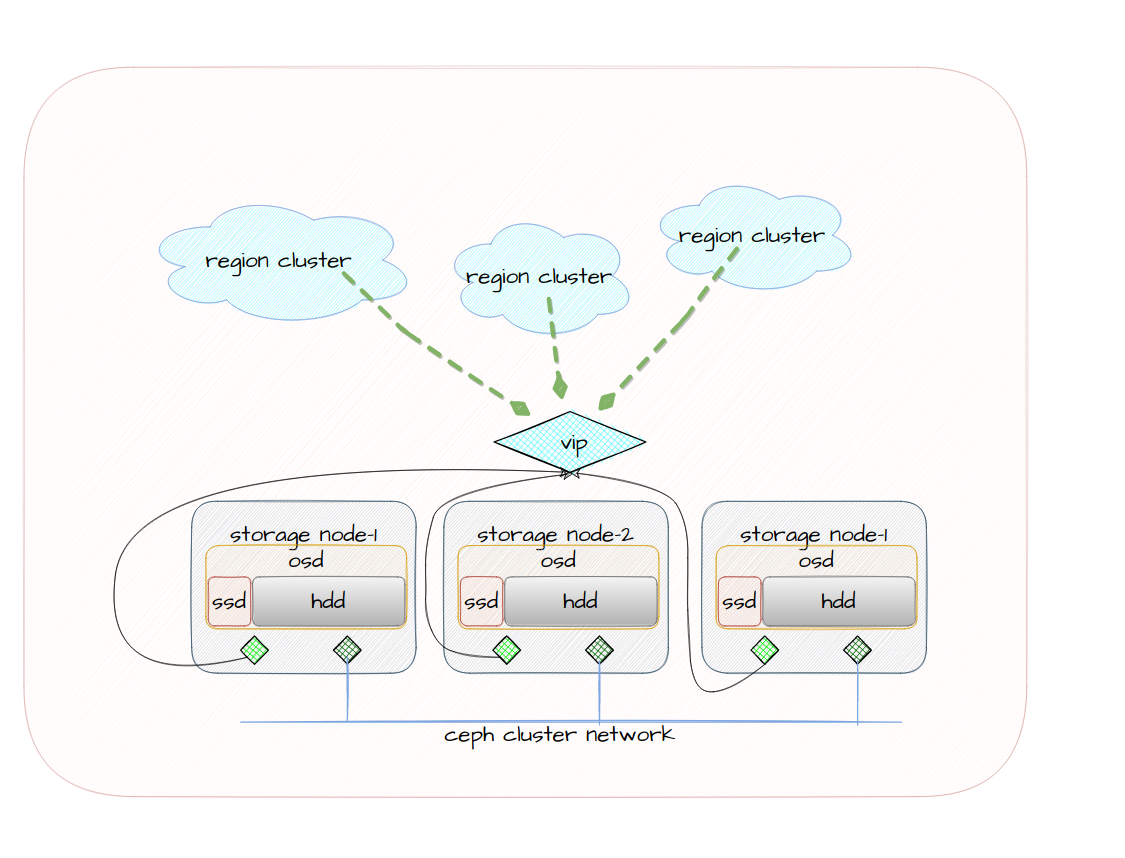

Cluster network architecture diagram

Procedure

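- To configure network isolation, first check the network environment of the cluster. The example cluster in this document uses the following addresses:

  | public ip | private ip |
  | --- | --- |
  | 192.168.131.27/20 | 192.168.144.127/22 |
  | 192.168.131.28/20 | 192.168.144.128/22 |
  | 192.168.131.26/20 | 192.168.144.126/22 |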

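- When deploying distributed storage from the web console, after the Deploy Operator step and before the Create Cluster step, run the following command on a master node of the cluster:

      kubectl -n rook-ceph edit configmap rook-config-override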

- In the `config` field, add the settings shown here. Note: adjust the network values to match your environment. `public network` and `cluster network` must each be set to the network address (the first address) of the corresponding subnet, not to the address of an individual node.

      public network = 192.168.128.0/20
      cluster network = 192.168.144.0/22
      public addr = ""
      cluster addr = ""

  Example of the ConfigMap after the change:

      apiVersion: v1
      data:
        config: |-
          [global]
          public network = 192.168.128.0/20
          cluster network = 192.168.144.0/22
          public addr = ""
          cluster addr = ""
          mon_memory_target=1073741824
          mds_cache_memory_limit=2147483648
          osd_memory_target=2147483648
      kind: ConfigMap
      metadata:
        annotations:
          cpaas.io/creator: admin@cpaas.io
          cpaas.io/updated-at: "2021-09-23T13:19:45Z"
        creationTimestamp: "2021-09-23T13:17:50Z"
        name: rook-config-override
        namespace: rook-ceph
        resourceVersion: "259588"
        selfLink: /api/v1/namespaces/rook-ceph/configmaps/rook-config-override
        uid: 3d26f0f5-30f3-4ed2-8ec5-73d1d11bcd3c

- Return to the Create Distributed Storage page and continue with the Create Ceph Cluster step.
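The rule that `public network` and `cluster network` must be the first address of the subnet can be checked mechanically. The following is a minimal sketch using Python's standard `ipaddress` module; the helper names `network_of` and `common_network` are hypothetical and are not part of Rook or Ceph. The node addresses are the ones from the example environment table.

```python
import ipaddress


def network_of(host_cidr: str) -> str:
    """Return the subnet (in CIDR form) that contains a host address like 192.168.131.27/20."""
    return str(ipaddress.ip_interface(host_cidr).network)


def common_network(host_cidrs):
    """Return the single subnet shared by all hosts, or raise if they differ."""
    networks = {network_of(ip) for ip in host_cidrs}
    if len(networks) != 1:
        raise ValueError(f"hosts are not on one subnet: {sorted(networks)}")
    return networks.pop()


# Node addresses taken from the example environment table.
public_ips = ["192.168.131.27/20", "192.168.131.28/20", "192.168.131.26/20"]
private_ips = ["192.168.144.127/22", "192.168.144.128/22", "192.168.144.126/22"]

public_network = common_network(public_ips)
cluster_network = common_network(private_ips)

print(f"public network = {public_network}")    # public network = 192.168.128.0/20
print(f"cluster network = {cluster_network}")  # cluster network = 192.168.144.0/22
```

The two printed lines match the values used in the example ConfigMap, so the same approach can be applied to the addresses of your own nodes before editing `rook-config-override`.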

- Verify that the network configuration has taken effect.

  - Check the Ceph cluster status. `HEALTH_OK` indicates that the cluster is healthy. Run the following command:

        ceph -c ceph.config -s

    Example output:

          cluster:
            id:     77a638ee-dab8-440f-8ca6-7d1c80d9f52b
            health: HEALTH_OK

          services:
            mon: 3 daemons, quorum a,b,c (age 31m)
            mgr: a(active, since 28m)
            osd: 3 osds: 3 up (since 30m), 3 in (since 30m)

          data:
            pools:   0 pools, 0 pgs
            objects: 0 objects, 0 B
            usage:   3.0 GiB used, 57 GiB / 60 GiB avail
            pgs:

  - Inspect the OSD configuration. The last lines of the output show the IP addresses used by each OSD. In this example, `192.168.131.27` and `192.168.144.127` are addresses from the two configured networks. Run the following command:

        ceph -c ceph.config osd dump

    Example output:

        epoch 9
        fsid 77a638ee-dab8-440f-8ca6-7d1c80d9f52b
        created 2021-09-23 13:20:27.023887
        modified 2021-09-23 13:21:56.629467
        flags sortbitwise,recovery_deletes,purged_snapdirs,pglog_hardlimit
        crush_version 5
        full_ratio 0.95
        backfillfull_ratio 0.9
        nearfull_ratio 0.85
        require_min_compat_client jewel
        min_compat_client jewel
        require_osd_release nautilus
        max_osd 3
        osd.0 up in weight 1 up_from 7 up_thru 0 down_at 0 last_clean_interval [0,0) [v2:192.168.131.27:6800/23085,v1:192.168.131.27:6801/23085] [v2:192.168.144.127:6800/23085,v1:192.168.144.127:6801/23085] exists,up 08e1474e-8623-4368-b833-0b77635a99c6
        osd.1 up in weight 1 up_from 9 up_thru 0 down_at 0 last_clean_interval [0,0) [v2:192.168.131.28:6800/20574,v1:192.168.131.28:6801/20574] [v2:192.168.144.128:6800/20574,v1:192.168.144.128:6801/20574] exists,up 315de735-7b79-4cf1-b660-67b4e1a39862
        osd.2 up in weight 1 up_from 9 up_thru 0 down_at 0 last_clean_interval [0,0) [v2:192.168.131.26:6802/16942,v1:192.168.131.26:6803/16942] [v2:192.168.144.126:6800/16942,v1:192.168.144.126:6801/16942] exists,up ed6267fb-d3b3-4f86-8d53-3543ea115297

  - Check the configuration file to confirm that the change has been applied. Run the following command:

        cat /var/lib/rook/rook-ceph/rook-ceph.config

    Example output:

        [global]
        fsid = 77a638ee-dab8-440f-8ca6-7d1c80d9f52b
        mon initial members = c a b
        mon host = [v2:192.168.131.28:3300,v1:192.168.131.28:6789],[v2:192.168.131.27:3300,v1:192.168.131.27:6789],[v2:192.168.131.26:3300,v1:192.168.131.26:6789]
        public addr =
        cluster addr =
        public network = 192.168.128.0/20
        cluster network = 192.168.144.0/22
        mon_memory_target = 1073741824
        mds_cache_memory_limit = 2147483648
        osd_memory_target = 2147483648

  - Check which ports are in the listening state. In the example, the ports bound to `192.168.144.126` are the ones on which the Ceph internal services of the current node are listening. Run the following command:

        netstat -vatn | grep LISTEN | grep 680

    Example output:

        tcp 0 0 192.168.131.26:6800 0.0.0.0:* LISTEN
        tcp 0 0 192.168.144.126:6800 0.0.0.0:* LISTEN
        tcp 0 0 192.168.131.26:6801 0.0.0.0:* LISTEN
        tcp 0 0 192.168.144.126:6801 0.0.0.0:* LISTEN
        tcp 0 0 192.168.144.126:6802 0.0.0.0:* LISTEN
        tcp 0 0 192.168.131.26:6802 0.0.0.0:* LISTEN
        tcp 0 0 192.168.144.126:6803 0.0.0.0:* LISTEN
        tcp 0 0 192.168.131.26:6803 0.0.0.0:* LISTEN
        tcp 0 0 192.168.131.26:6804 0.0.0.0:* LISTEN
        tcp 0 0 192.168.131.26:6805 0.0.0.0:* LISTEN
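Reading the listening-port output by eye becomes tedious on nodes with many OSDs. As a rough sketch, the lines can be grouped by the network their local address belongs to. The function name `listening_ports_by_network` is hypothetical, the network values are the ones from this example, and the parser assumes the six-column `netstat -vatn` layout shown in the sample output.

```python
import ipaddress
from collections import defaultdict

# Networks from the example ConfigMap; adjust to your environment.
PUBLIC_NETWORK = ipaddress.ip_network("192.168.128.0/20")
CLUSTER_NETWORK = ipaddress.ip_network("192.168.144.0/22")


def listening_ports_by_network(netstat_output: str):
    """Group listening ports by 'public', 'cluster', or 'other' local address."""
    ports = defaultdict(set)
    for line in netstat_output.splitlines():
        fields = line.split()
        # Expected columns: proto, recv-q, send-q, local address, foreign address, state
        if len(fields) < 6 or fields[-1] != "LISTEN":
            continue
        host, _, port = fields[3].rpartition(":")
        try:
            addr = ipaddress.ip_address(host)
        except ValueError:
            continue  # skip local addresses that cannot be parsed
        if addr in CLUSTER_NETWORK:
            ports["cluster"].add(int(port))
        elif addr in PUBLIC_NETWORK:
            ports["public"].add(int(port))
        else:
            ports["other"].add(int(port))
    return {name: sorted(values) for name, values in ports.items()}


# A few lines copied from the example output.
SAMPLE = """\
tcp 0 0 192.168.131.26:6800 0.0.0.0:* LISTEN
tcp 0 0 192.168.144.126:6800 0.0.0.0:* LISTEN
tcp 0 0 192.168.144.126:6801 0.0.0.0:* LISTEN
tcp 0 0 192.168.131.26:6804 0.0.0.0:* LISTEN
"""

result = listening_ports_by_network(SAMPLE)
print(result)
```

If the `cluster` entry is missing or empty on an OSD node, no service is bound to the cluster network there, which suggests the override was not picked up.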

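The `ceph osd dump` check can also be automated. The sketch below tests, for each OSD line, whether its addresses fall inside the configured networks. It assumes, as in the sample output in this document, that the first address vector on an `osd.N` line is the public address and the second is the cluster address. The function name `osd_networks_ok` is hypothetical and is not a Ceph API.

```python
import ipaddress
import re

# Matches the IPv4 part of msgr2 entries such as v2:192.168.131.27:6800/23085
V2_ADDR_RE = re.compile(r"v2:(\d+\.\d+\.\d+\.\d+):\d+")


def osd_networks_ok(osd_dump: str, public_network: str, cluster_network: str):
    """Map each OSD name to True if its public and cluster addresses are in the given networks."""
    public = ipaddress.ip_network(public_network)
    cluster = ipaddress.ip_network(cluster_network)
    results = {}
    for line in osd_dump.splitlines():
        if not line.startswith("osd."):
            continue  # skip header lines such as epoch, fsid, flags
        name = line.split()[0]
        addrs = [ipaddress.ip_address(a) for a in V2_ADDR_RE.findall(line)]
        # Assumption: first v2 address is public, second is cluster (as in the sample).
        results[name] = (
            len(addrs) >= 2 and addrs[0] in public and addrs[1] in cluster
        )
    return results


# Two OSD lines copied from the example output, preceded by one header line.
SAMPLE_DUMP = """\
epoch 9
osd.0 up in weight 1 up_from 7 up_thru 0 down_at 0 last_clean_interval [0,0) [v2:192.168.131.27:6800/23085,v1:192.168.131.27:6801/23085] [v2:192.168.144.127:6800/23085,v1:192.168.144.127:6801/23085] exists,up 08e1474e-8623-4368-b833-0b77635a99c6
osd.1 up in weight 1 up_from 9 up_thru 0 down_at 0 last_clean_interval [0,0) [v2:192.168.131.28:6800/20574,v1:192.168.131.28:6801/20574] [v2:192.168.144.128:6800/20574,v1:192.168.144.128:6801/20574] exists,up 315de735-7b79-4cf1-b660-67b4e1a39862
"""

check = osd_networks_ok(SAMPLE_DUMP, "192.168.128.0/20", "192.168.144.0/22")
print(check)  # {'osd.0': True, 'osd.1': True}
```

An OSD reported as `False` is using an address outside one of the two networks; in a cluster where isolation did not take effect, both address vectors would typically show the same public address.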